"Does Dante support failover and load balancing?"

Posted by Inferno Nettverk A/S, Norway on Mon Nov 6 23:02:41 MET 2017

We have sometimes been asked by customers whether the Dante server supports fault tolerance and load balancing. It has always been a difficult question to answer simply, since fault tolerance and load balancing are typically achieved by installing multiple Dante servers in a redundant configuration, using software or hardware external to Dante rather than functionality found in Dante itself.

A better way to phrase the question is "how can I create a fault tolerant (or load-balanced) Dante installation?". To answer this and other related questions we have created a new page on redundancy that goes into some theoretical and practical detail on different ways to achieve redundancy, and how Dante will work in these different configurations.

Dante supports outgoing TCP connections (SOCKS CONNECT), incoming TCP connections (SOCKS BIND), and both incoming and outgoing UDP traffic (SOCKS UDP ASSOCIATE), any of which may or may not have data encryption enabled. This creates some potential complexity that should be considered when setting up a redundant Dante configuration. The new page on redundancy examines these issues. Rather than repeat all the details in that document, this posting provides some commentary on the usability of Dante in a fault tolerant or load-balanced configuration, and gives a simple example of how this can be done.

Support for redundancy in Dante

So, does Dante support fault tolerance and load balancing? The way Dante is designed, it works perfectly well with various solutions that provide this type of functionality; one just needs to set up Dante together with whichever load balancing/fault tolerance solution one chooses to use.

Kerberos-based GSSAPI authentication and data encryption, supported by Dante, might seem problematic, since machines in a Kerberos network will typically need a reverse-mappable IP-address. When multiple Dante servers are running on multiple machines, potentially all with distinct IP-addresses that differ from the Dante server address the client thinks it is communicating with, getting everything to work correctly may sound difficult. However, even this scenario works well thanks to some special functionality in Dante.

SOCKS UDP, which requires two independent communication channels to the same SOCKS server (the TCP control connection and the UDP traffic itself), might seem like another potential pitfall. However, UDP also works, though it may require some awareness of how the failover/load balancing mechanism is implemented, especially if any packet filtering is done between the SOCKS clients and the Dante server.

Several redundancy/load balancing systems are available today, both commercial and open source. We have tested and provided some simple example configurations for Dante when using CARP, relayd(8), or Clusterlabs/Pacemaker. These solutions are all implemented differently and work at different network levels: layer 2 (ARP), layer 3 (IP), or layer 4/7 ("socket layer"), and should thus provide a good starting point for integration with other systems not specifically tested by us as well. If you use the Dante server with another system, feel free to leave a comment below describing how you have configured things and how it worked.

A simple Dante active/passive failover configuration using Pacemaker

To show how an active/passive failover configuration can be set up on Linux, we give an example using the Clusterlabs/Pacemaker system.

Please see the Dante redundancy page for more information about other systems.

Pacemaker can be configured for active/passive failover, where one node is designated the active node and another the passive node. The active node handles all traffic, but if it suffers a failure, the passive node takes over and becomes the new active node.

With Pacemaker, the shared IP-address and the running Dante server will only be active on one of the nodes at a time. When the passive node takes over, the shared IP-address is first configured on that node, and only after this has been done is the Dante server started. Since only one node at a time uses the shared IP-address and runs a Dante server, this gives a simple and easily understood redundant system.

First, Dante must be installed and configured on both nodes, for example using a configuration like the one below. Here IP-SHARED is the address the SOCKS clients communicate with, and IP-DANTEEXT is the external address used by Dante.

Both nodes can use identical, or almost identical, Dante configurations. The only value that might need to be different is the external IP-address used by Dante (IP-DANTEEXT/IP-DANTEEXT2). If the external IP-address used by Dante is the same on both nodes, the Dante configuration will probably be identical on both nodes.

errorlog: syslog
logoutput: /var/log/sockd.log
internal: IP-SHARED
external: IP-DANTEEXT # IP-DANTEEXT2 for second node

client pass {
    from: 0/0 to: 0/0
    log: error connect disconnect
}

socks pass {
    from: 0/0 to: 0/0
    log: error connect disconnect
}
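Because only the external line differs between the nodes, it can help to generate both files from a single template so that the two configurations do not drift apart. The sketch below assumes bash; write_sockd_conf is a hypothetical helper, not part of Dante, and its optional third argument (the internal port) defaults to 1080, the port used later in this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a sockd.conf for one cluster node.
#   $1 = shared internal address (IP-SHARED)
#   $2 = this node's external address (IP-DANTEEXT or IP-DANTEEXT2)
#   $3 = internal port (optional, defaults to 1080)
write_sockd_conf() {
    local internal="$1" external="$2" port="${3:-1080}"
    cat <<EOF
errorlog: syslog
logoutput: /var/log/sockd.log
internal: ${internal} port = ${port}
external: ${external}

client pass {
    from: 0/0 to: 0/0
    log: error connect disconnect
}

socks pass {
    from: 0/0 to: 0/0
    log: error connect disconnect
}
EOF
}
```

For example, `write_sockd_conf 10.0.0.1 192.0.2.10 > /etc/sockd.conf` on the first node, where 192.0.2.10 is only a placeholder for IP-DANTEEXT. Passing the shared address twice gives the identical-on-both-nodes variant.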

With Dante installed, Pacemaker can be configured. A full description of all the steps involved in setting up a Pacemaker-based cluster is outside the scope of this document, but general configuration is well documented elsewhere, including in the clusterlabs.org Quickstart and the RHEL High Availability Add-On configuration page.

To use Pacemaker with Dante, an OCF Resource Agent script must also be installed, and one can be downloaded from here: dante.ocf

This resource agent uses process based monitoring of the Dante SOCKS server, by verifying that the pid stored in the server pid file is actually running. The following three optional arguments are available:

- 'sockd' - The server binary (defaults to /usr/sbin/sockd).

- 'sockd_config' - The server configuration file (defaults to /etc/sockd.conf).

- 'sockd_pidfile' - The server pid file (defaults to /var/run/sockd.pid).
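The idea behind process-based monitoring can be sketched in a few lines of bash. This is not the code of dante.ocf, only an illustration assuming the pid file holds a single numeric pid; sockd_monitor is a hypothetical name. It returns the standard OCF monitor codes: 0 (OCF_SUCCESS) when the server is running and 7 (OCF_NOT_RUNNING) when it is stopped.

```shell
#!/usr/bin/env bash
# Illustrative pid-file monitor; NOT the actual dante.ocf implementation.
# Returns OCF monitor codes: 0 = OCF_SUCCESS (running),
#                            7 = OCF_NOT_RUNNING (stopped).
sockd_monitor() {
    local pidfile="${1:-/var/run/sockd.pid}" pid=""
    # No readable pid file: treat the server as stopped.
    [ -r "$pidfile" ] || return 7
    # Read the first line; also accept a last line without a newline.
    read -r pid < "$pidfile" || [ -n "$pid" ] || return 7
    # Reject empty, non-numeric or zero pids ("kill -0 0" would test our
    # own process group and always succeed).
    case "$pid" in
        ''|*[!0-9]*) return 7 ;;
    esac
    [ "$pid" -gt 0 ] || return 7
    # kill -0 delivers no signal; it only checks that the process exists
    # and that we may signal it (always true when running as root).
    if kill -0 "$pid" 2>/dev/null; then
        return 0
    fi
    return 7
}
```

A check like this only tells whether some process with that pid exists. It cannot detect a server that is running but not accepting connections, or a pid that has been reused by an unrelated process, which is why a wider range of failure detection is a natural improvement.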

To install the resource agent (assuming resource agents are located in the /usr/lib/ocf/resource.d directory), create the sub-directory /usr/lib/ocf/resource.d/dante and install the Dante OCF file there as an executable file named sockd. Pacemaker will then refer to the agent as ocf:dante:sockd.
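The installation step can be wrapped in a small bash function. This is a sketch: install_dante_agent is a hypothetical name, and the base directory is taken from an OCF_ROOT variable that defaults to /usr/lib/ocf, matching the path assumed above; override it if your distribution keeps resource agents elsewhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install a downloaded dante.ocf as the "sockd"
# agent of the "dante" provider, i.e. ocf:dante:sockd.
install_dante_agent() {
    local src="$1" root="${OCF_ROOT:-/usr/lib/ocf}"
    local dir="$root/resource.d/dante"
    if [ ! -f "$src" ]; then
        echo "no such file: $src" >&2
        return 1
    fi
    # Create resource.d/dante (and any missing parents).
    install -d -m 0755 "$dir" || return 1
    # The cluster executes resource agents, so the file must be executable.
    install -m 0755 "$src" "$dir/sockd"
}
```

Run it as root on every cluster node, for example `install_dante_agent ./dante.ocf`.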

With the OCF file and Dante installed on all cluster nodes, and the initial Pacemaker configuration completed, the Dante-related resources can be configured. The cluster will first need a shared IP-address (IP-SHARED, here 10.0.0.1 with a /8 netmask) that can be used by the Dante server on the active node:

pcs resource create FailoverIP ocf:heartbeat:IPaddr2 ip=10.0.0.1 cidr_netmask=8 op monitor interval=30s
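To see which node currently holds IP-SHARED (for example after a failover), one can look for the address among the node's configured addresses. The sketch below assumes Linux with iproute2; has_ipv4_addr is a hypothetical helper that reads the one-line-per-address output of `ip -o -4 addr show` from stdin, so it can also be checked against captured output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit status 0 if the IPv4 address given as $1
# appears in `ip -o -4 addr show` output read from stdin, 1 otherwise.
has_ipv4_addr() {
    local want="$1"
    awk -v want="$want" '
        # Field 3 is "inet", field 4 is ADDRESS/PREFIXLEN.
        $3 == "inet" { split($4, a, "/"); if (a[1] == want) found = 1 }
        END { exit !found }
    '
}
```

On a node: `ip -o -4 addr show | has_ipv4_addr 10.0.0.1 && echo "this node holds IP-SHARED"`.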

The sockd.conf file is then configured with this IP-address as the internal address (IP-SHARED) on each node:

internal: 10.0.0.1 port = 1080

If the external address (IP-DANTEEXT) is also set to the shared IP-address, traffic passing through the SOCKS server will appear identical for both nodes, with the same addresses as endpoints, regardless of which node is active:

external: 10.0.0.1

Alternatively, a unique external address can be configured for each node, in which case the external keyword will likely need to be different on each node.

With the Dante servers configured, it should be possible to create a Dante server resource, for example using this command:

pcs resource create SOCKDfailover ocf:dante:sockd op monitor interval=30s

Or with all options specified:

pcs resource create SOCKDfailover ocf:dante:sockd \
    sockd=/usr/local/sbin/sockd-1.4.2 \
    sockd_config=/etc/sockd-failover.conf \
    sockd_pidfile=/var/run/sockd-failover.pid op monitor interval=30s

The shared IP-address and the Dante server resources then need to be grouped together to ensure that they are always running on the same node at the same time:

pcs resource group add SOCKD_failover FailoverIP SOCKDfailover
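The three pcs commands used in this section can be collected into one bash function so the setup is repeatable. This is a sketch under assumptions: create_dante_failover is a hypothetical name, the Dante resource uses the agent's default paths, and setting the PCS variable to echo turns it into a dry run that only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the pcs commands from this section.
# PCS defaults to "pcs"; PCS=echo only prints the commands.
create_dante_failover() {
    local ip="$1" prefix="${2:-8}" pcs="${PCS:-pcs}"
    if [ -z "$ip" ]; then
        echo "usage: create_dante_failover SHARED_IP [PREFIX_LEN]" >&2
        return 2
    fi
    # 1. The shared address (IP-SHARED), managed by the IPaddr2 agent.
    $pcs resource create FailoverIP ocf:heartbeat:IPaddr2 \
        ip="$ip" cidr_netmask="$prefix" op monitor interval=30s || return 1
    # 2. The Dante server, using the agent's default sockd/config/pid paths.
    $pcs resource create SOCKDfailover ocf:dante:sockd \
        op monitor interval=30s || return 1
    # 3. Group both resources: a group keeps its members on the same node,
    #    starts them in the listed order (address, then Dante) and stops
    #    them in reverse order.
    $pcs resource group add SOCKD_failover FailoverIP SOCKDfailover
}
```

Since the cluster configuration is shared by all nodes, this only needs to be run once, on one node, after the OCF agent has been installed everywhere. `PCS=echo create_dante_failover 10.0.0.1 8` shows what would be executed without touching the cluster. The listed group order is what gives the behavior described earlier, where the shared IP-address is configured before the Dante server is started.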

Finally, the status can be queried to verify that all resources are running correctly.

pcs status

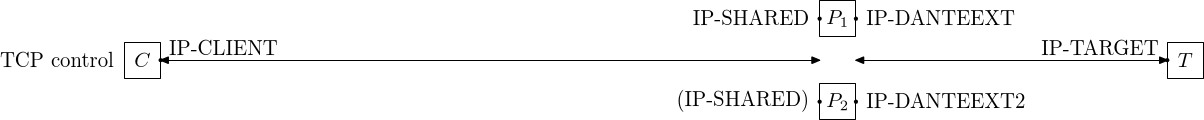

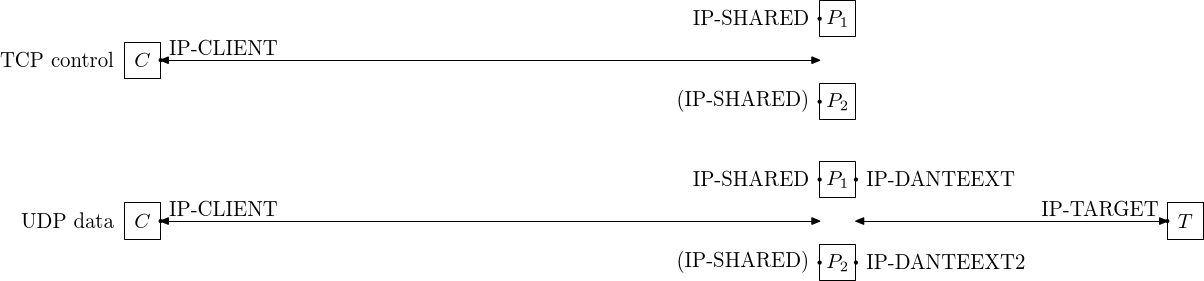

The two figures below show how communication in the resulting configuration will be structured, for the different SOCKS commands.

For TCP CONNECT and BIND:

For UDP ASSOCIATE:

For other fault tolerant and load-balanced configurations, please consult the main page on redundancy in Dante.

In conclusion

So, does Dante support fault tolerance and load balancing? I guess we might say that Dante supports both fault tolerance and load balancing, when integrated with systems that provide them.

That is not to say that there is no room for improvement in Dante. For example, in some configurations it might be desirable to control which IP-address the Dante server uses to receive UDP traffic from the SOCKS client, which is not possible at the moment. This address defaults to the address the SOCKS request is received on, which should be correct in most situations, but being able to change it might still be beneficial. Apart from this, the OCF file for Clusterlabs/Pacemaker could be improved by adding support for detecting a wider range of potential server failures. Both of these areas are likely to be improved in future versions of Dante if customers request it.

Another, more exotic, way to interpret "Does Dante support fault tolerance" is "does Dante recover from node failures?", as in: will it be able to handle the loss of a machine in a cluster without losing active client sessions?

The answer to this is currently "no". This is functionality it might be possible to implement on some platforms, but it would require platform/system specific code in Dante. This could possibly be an interesting project, but is not something planned or requested by any customer at the moment.
