Layer 3-based redundancy

This page describes how Dante can be configured for layer 3-based redirection. For a general overview of fault tolerance and load balancing in Dante, see the main page on redundancy.

Layer 3 redirection

Failover and load balancing can also be implemented at layer 3 by having a relay rewrite packets and forward them to the nodes in the cluster. This can also involve layer 4 if TCP/UDP header fields such as port numbers are changed. The OpenBSD relayd(8) application is an example of this. It integrates with the kernel-level PF packet filter, which handles the actual packet forwarding and header rewriting.

The relayd(8) application works with a pool of servers (IP addresses/hostnames and a port number), which it can be configured to monitor at regular intervals. Based on the monitoring results, machines are added to or removed from the pool. In this configuration the shared IP-address is located on the relayd(8) node. Packets sent to this IP-address are redirected to the machines in the server pool according to the configured server selection algorithm.
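The sketch below is a rough illustration of the pool bookkeeping just described. It shows how check results decide which servers receive traffic. The `ServerPool` class and its methods are hypothetical names. The code is a model of the idea, not relayd's implementation. The addresses are the ones used in the relayd example later on this page.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ServerPool:
    """Hypothetical model of a monitored server pool (not relayd's code)."""

    configured: list[str]  # every server listed in the configuration
    healthy: set[str] = field(default_factory=set)  # servers that passed their last check

    def record_check(self, server: str, ok: bool) -> None:
        """Add the server to the pool on success, remove it on failure."""
        if server not in self.configured:
            raise ValueError(f"unknown server: {server}")
        if ok:
            self.healthy.add(server)
        else:
            self.healthy.discard(server)

    def active(self) -> list[str]:
        """Servers that currently receive traffic, in configuration order."""
        return [s for s in self.configured if s in self.healthy]


pool = ServerPool(["10.0.1.2:1080", "10.0.1.3:1080"])
pool.record_check("10.0.1.2:1080", True)
pool.record_check("10.0.1.3:1080", True)
pool.record_check("10.0.1.3:1080", False)  # second Dante node stops answering
print(pool.active())  # ['10.0.1.2:1080']
```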

With this type of redirection system, either a fault-tolerant or a load-balanced Dante installation can be achieved, depending on the behaviour of the server selection algorithm. The relayd(8) application currently appears to support only variations of active/active load-balanced configurations. However, nothing should prevent layer 3 redirection from being used to build active/passive fault-tolerant systems.
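As a hedged sketch, the difference between the two kinds of system can be expressed as two selection functions over the list of healthy servers. Active/passive always picks the highest-priority healthy node, which gives fault tolerance. Round-robin spreads new connections over all healthy nodes, which gives load balancing. Both functions are illustrative and hypothetical. Neither reproduces a particular relayd(8) mode.

```python
from __future__ import annotations

import itertools


def select_active_passive(active: list[str], priority: list[str]) -> str | None:
    """Fault tolerance: send everything to the highest-priority healthy server."""
    for server in priority:
        if server in active:
            return server
    return None  # no healthy server left


class RoundRobin:
    """Load balancing: hand new connections to each healthy server in turn."""

    def __init__(self) -> None:
        self._counter = itertools.count()

    def select(self, active: list[str]) -> str | None:
        if not active:
            return None
        return active[next(self._counter) % len(active)]


servers = ["10.0.1.2", "10.0.1.3"]  # IP-DANTE, IP-DANTE2
print(select_active_passive(servers, servers))       # 10.0.1.2 (active node)
print(select_active_passive(["10.0.1.3"], servers))  # 10.0.1.3 (failover)
rr = RoundRobin()
print([rr.select(servers) for _ in range(4)])
# ['10.0.1.2', '10.0.1.3', '10.0.1.2', '10.0.1.3']
```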

If the relay itself can also be installed in a fault-tolerant configuration, it will not become a single point of failure for the system as a whole.

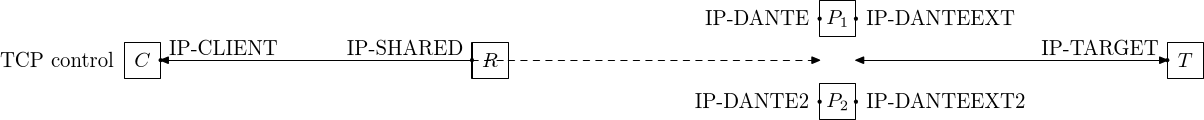

The figure above shows the connection endpoints in a layer 3 shared IP redirection fault-tolerant/load-balanced configuration for the TCP-based commands CONNECT and BIND. Unlike layer 2 shared IP-address configurations, there is now a relay node (R) that holds the IP-SHARED address, and the client sees this address as its peer IP-address. There are two separate connections:

- from the client to the proxy, passing through the relay;
- from the proxy to the target server.

The relay redirects traffic sent to the IP-SHARED address. The Dante servers are bound to the distinct internal addresses IP-DANTE/IP-DANTE2 and the external addresses IP-DANTEEXT/IP-DANTEEXT2.

Depending on which node is communicating with a client, the following two connection pair alternatives exist:

- P1 used: IP-CLIENT to IP-SHARED (to IP-DANTE) and IP-DANTEEXT to IP-TARGET

- P2 used: IP-CLIENT to IP-SHARED (to IP-DANTE2) and IP-DANTEEXT2 to IP-TARGET

Seen from the client, the only difference between the two alternatives is the IP-DANTEEXT/IP-DANTEEXT2 address. The actual endpoints for communication with the clients are the IP-DANTE and IP-DANTE2 addresses. Because the relay redirects the traffic, these addresses are not visible to the client when CONNECT or BIND is used, as indicated by the dashed line in the figure.
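The following toy model shows why IP-DANTE stays hidden. It is a minimal sketch of destination NAT, not of PF's internals, and it rests on a few assumptions:

- Replies from the Dante server are routed back through the relay.
- The relay always picks a single fixed backend.
- The client address 192.0.2.10 is hypothetical.

The relay rewrites the destination on the way in and the source on the way out. As a result, the client only ever exchanges packets with IP-SHARED.

```python
from __future__ import annotations

from dataclasses import dataclass, replace

IP_SHARED = "10.0.0.1"  # address held by the relay
SOCKS_PORT = 1080


@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int


class Relay:
    """Toy destination-NAT relay; illustrates the idea, not PF internals."""

    def __init__(self, backend: str) -> None:
        self.backend = backend  # Dante server chosen for new connections
        self.states: dict[tuple[str, int], str] = {}  # client (ip, port) -> server

    def from_client(self, pkt: Packet) -> Packet:
        """Client -> IP-SHARED: rewrite the destination to the Dante server."""
        if (pkt.dst, pkt.dport) != (IP_SHARED, SOCKS_PORT):
            raise ValueError("not addressed to the shared SOCKS address")
        server = self.states.setdefault((pkt.src, pkt.sport), self.backend)
        return replace(pkt, dst=server)

    def to_client(self, pkt: Packet) -> Packet:
        """Dante server -> client: rewrite the source back to IP-SHARED."""
        if self.states.get((pkt.dst, pkt.dport)) != pkt.src:
            raise ValueError("no matching state for this reply")
        return replace(pkt, src=IP_SHARED)


relay = Relay(backend="10.0.1.2")  # IP-DANTE
print(relay.from_client(Packet("192.0.2.10", 40000, IP_SHARED, SOCKS_PORT)))
# Packet(src='192.0.2.10', sport=40000, dst='10.0.1.2', dport=1080)
print(relay.to_client(Packet("10.0.1.2", SOCKS_PORT, "192.0.2.10", 40000)))
# Packet(src='10.0.0.1', sport=1080, dst='192.0.2.10', dport=40000)
```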

IP-DANTEEXT and IP-DANTEEXT2 will likely need to be different IP-addresses. With layer 3 redirection, there is no shared-address mechanism on the Dante server nodes.

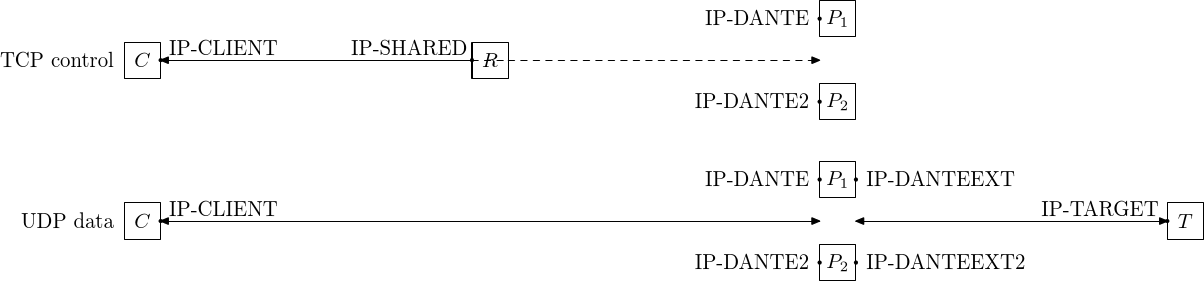

The figure above shows the socket endpoints for UDP traffic. In addition to the TCP control connection, there are two separate UDP data socket pairs. Unlike the layer 2 shared IP-address mechanisms, layer 3 redirection has the client send UDP data directly to the IP-DANTE/IP-DANTE2 addresses on the proxy machines. This is because the Dante server currently always receives UDP data on the same address that the TCP control connection was received on. The Dante servers are bound to IP-DANTE/IP-DANTE2 and not to IP-SHARED. The address that clients are told to send UDP traffic to is therefore the address the server is bound to, so UDP traffic goes directly between the clients and the Dante servers rather than through the relay.
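In SOCKS v5 (RFC 1928), the server's reply to a UDP ASSOCIATE request carries BND.ADDR and BND.PORT. These fields tell the client where to send its UDP datagrams. The sketch below builds and parses that reply for an IPv4 address. It shows the wire format only and is not taken from Dante's source. The UDP port 40001 is a hypothetical value. Because the server was reached on IP-DANTE, that address, and not IP-SHARED, ends up in BND.ADDR.

```python
from __future__ import annotations

import socket
import struct

# VER, REP, RSV, ATYP, BND.ADDR (IPv4), BND.PORT, in network byte order
_REPLY_V4 = struct.Struct("!BBBB4sH")


def build_reply(bound_addr: str, bound_port: int) -> bytes:
    """Successful SOCKS5 reply (RFC 1928) carrying an IPv4 bound address."""
    return _REPLY_V4.pack(5, 0, 0, 1, socket.inet_aton(bound_addr), bound_port)


def parse_reply(data: bytes) -> tuple[str, int]:
    """Return the (address, port) the client should send UDP datagrams to."""
    ver, rep, _rsv, atyp, addr, port = _REPLY_V4.unpack(data)
    if ver != 5 or rep != 0 or atyp != 1:
        raise ValueError("not a successful IPv4 SOCKS5 reply")
    return socket.inet_ntoa(addr), port


# Node 1 accepted the relayed control connection on IP-DANTE (10.0.1.2);
# the UDP port 40001 is a hypothetical value chosen by the server.
reply = build_reply("10.0.1.2", 40001)
print(parse_reply(reply))  # ('10.0.1.2', 40001)
```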

With regard to load balancing, this should not be a problem. Load balancing is achieved by redirecting the TCP control connections. Since the UDP data goes to the same machine as its control connection, the traffic is still load-balanced. Depending on how routing is done, this might even be an advantage, since it reduces the load on the relay.

For routing and firewall rules, this means that the clients must be able to reach the IP-DANTE/IP-DANTE2 addresses, since UDP traffic goes to them directly. Direct TCP communication is not needed, so direct TCP traffic between the clients and the Dante servers can be dropped.
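The rule just stated can be summarized as a small decision function for packets coming from clients. This is a hypothetical sketch of the policy only. It is not a PF ruleset and does not replace one. It assumes the example addresses used in the relayd configuration on this page.

```python
from __future__ import annotations

IP_SHARED = "10.0.0.1"                     # relay address (IP-SHARED)
DANTE_INTERNAL = {"10.0.1.2", "10.0.1.3"}  # IP-DANTE, IP-DANTE2
SOCKS_PORT = 1080


def client_packet_allowed(proto: str, dst: str, dport: int) -> bool:
    """Hypothetical policy for packets from SOCKS clients (not a PF ruleset)."""
    if proto == "tcp":
        # Control connections only via the relay; direct TCP to Dante is dropped.
        return dst == IP_SHARED and dport == SOCKS_PORT
    if proto == "udp":
        # UDP data goes straight to the Dante servers; the port is chosen
        # per session by the server, so it is not restricted here.
        return dst in DANTE_INTERNAL
    return False


print(client_packet_allowed("tcp", "10.0.0.1", 1080))   # True
print(client_packet_allowed("tcp", "10.0.1.2", 1080))   # False
print(client_packet_allowed("udp", "10.0.1.2", 40001))  # True
```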

Example Dante configuration

A minimal Dante configuration can look like this (first Dante server):

```
errorlog: syslog
logoutput: /var/log/sockd.log

internal: IP-DANTE       #IP-DANTE2 for second node
external: IP-DANTEEXT    #IP-DANTEEXT2 for second node

client pass {
        from: 0/0 to: 0/0
        log: error connect disconnect
}

socks pass {
        from: 0/0 to: 0/0
        log: error connect disconnect
}
```
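The two Dante nodes differ only in their internal and external addresses, so both files can be generated from one template. The sketch below does this. The internal addresses match the relayd example on this page. The external addresses (198.51.100.x) and the node names are hypothetical placeholders for IP-DANTEEXT/IP-DANTEEXT2.

```python
TEMPLATE = """\
errorlog: syslog
logoutput: /var/log/sockd.log

internal: {internal}
external: {external}

client pass {{
        from: 0/0 to: 0/0
        log: error connect disconnect
}}

socks pass {{
        from: 0/0 to: 0/0
        log: error connect disconnect
}}
"""

# node name -> (internal address, external address); externals are hypothetical
NODES = {
    "dante1": ("10.0.1.2", "198.51.100.2"),  # IP-DANTE, IP-DANTEEXT
    "dante2": ("10.0.1.3", "198.51.100.3"),  # IP-DANTE2, IP-DANTEEXT2
}


def render(node: str) -> str:
    """Return the sockd.conf text for one node."""
    internal, external = NODES[node]
    return TEMPLATE.format(internal=internal, external=external)


if __name__ == "__main__":
    for name in NODES:
        print(f"# --- {name} ---")
        print(render(name))
```

Each rendered file would then be installed as the sockd.conf of the corresponding node.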

Example relayd(8) configuration

The following relayd(8) configuration creates a pool of two servers that traffic is forwarded to, with the server selected based on the source IP-address. UDP traffic goes directly to the Dante servers, so the server selection algorithm does not need to take UDP traffic into account. Each Dante server still has a different external address. A hashing algorithm based on the source IP-address therefore reduces the risk of client application interoperability problems (see the section above on external address variation for more details).

/etc/relayd.conf:

```
#public address for load-balanced SOCKS server
relayd_addr="10.0.0.1"    #IP-SHARED
relayd_port="1080"
relayd_int="em0"

#server availability check interval
interval 5

#actual SOCKS server addresses and port number
table <socksservers> { 10.0.1.2, 10.0.1.3 }    #IP-DANTE, IP-DANTE2
servers_port="1080"

#redirection rule, using source-hash algorithm
redirect "socksbalance" {
        listen on $relayd_addr port $relayd_port interface $relayd_int
        forward to <socksservers> port $servers_port mode source-hash check tcp
}
```

It is also necessary to configure PF:

/etc/pf.conf:

```
anchor "relayd/*"
```

With the above configuration, relayd(8) maintains the list of server addresses in the "socksservers" table. Packets for 10.0.0.1 port 1080 are forwarded to either 10.0.1.2 or 10.0.1.3. Every 5 seconds, relayd(8) checks whether each Dante server is available. It does this by trying to open a TCP connection to the address and port number the SOCKS server should be listening on, which is port 1080 on 10.0.1.2 and 10.0.1.3.
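A TCP-connect check of this kind can be sketched as follows. This is an approximation of what `check tcp` does, not relayd's code. The `monitor` helper and its parameters are hypothetical. As in relayd, a server only has to accept the connection to be considered available.

```python
from __future__ import annotations

import socket
import time


def check_tcp(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port can be opened (roughly 'check tcp')."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def monitor(
    servers: list[tuple[str, int]], interval: float = 5.0, rounds: int = 3
) -> list[list[tuple[str, int]]]:
    """Check every server each interval; return the available servers per round."""
    history = []
    for i in range(rounds):
        history.append([s for s in servers if check_tcp(*s)])
        if i < rounds - 1:
            time.sleep(interval)  # wait before the next round of checks
    return history
```

For the example setup, this could be called as `monitor([("10.0.1.2", 1080), ("10.0.1.3", 1080)])`.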

This simple check does not verify that connections can be forwarded, but it will detect the machine or SOCKS server going down. The source-hash mode appears to provide enough consistency in forwarding to avoid problems with requests from the same host being forwarded to a new SOCKS server for each request.
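The property relied on here is that a given source address always maps to the same server while the pool is unchanged. The sketch below illustrates this with SHA-256 over the client address. PF's source-hash uses its own hash function and key, so this is a substitute chosen only to show the idea, and the actual server assignments will differ. In this modulo-based sketch, a change in pool membership can also move clients whose server did not fail.

```python
from __future__ import annotations

import hashlib
import ipaddress


def source_hash(client_ip: str, servers: list[str]) -> str:
    """Pick a server from a hash of the client's source address."""
    if not servers:
        raise ValueError("no servers available")
    packed = ipaddress.ip_address(client_ip).packed  # 4 or 16 bytes
    digest = hashlib.sha256(packed).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


servers = ["10.0.1.2", "10.0.1.3"]  # IP-DANTE, IP-DANTE2
for client in ["192.0.2.10", "192.0.2.11", "192.0.2.10"]:
    # the first and third lines always name the same server
    print(client, "->", source_hash(client, servers))
```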

Note that the above configuration is only an example. There are many different ways to configure relayd(8), so please consult the relayd.conf(5) manual page for details and alternative configurations.

For an example of a more extensive relayd(8) check, which verifies that the Dante servers are actually forwarding requests and not just listening for connections, see the layer 4 redirection page.