Layer 2-based redundancy

This page describes how Dante can be configured for layer 2-based failover and load balancing. For a general overview of fault tolerance and load balancing in Dante, see the main page on redundancy.

Layer 2 shared IP failover fault tolerance

Layer 2 shared IP failover systems can be implemented in different ways, but will typically work by having a single IP-address that is shared among all nodes in the system. By default, a master server replies to traffic sent to the shared IP-address, with a backup server taking over if the master server goes down.

It is possible to create a fault-tolerant Dante configuration using this type of system. All nodes would have the same Dante configuration, using the same shared IP-address as the internal SOCKS server address, with potentially only the external address being different on the two machines. If the master node goes down, the Dante server on the backup node would take over and receive subsequent requests.

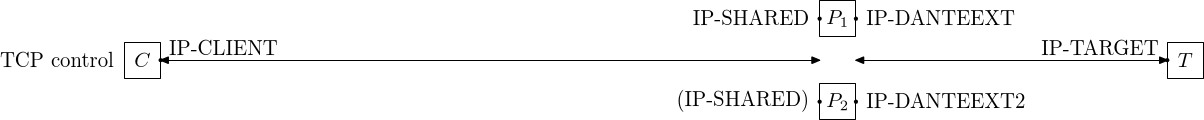

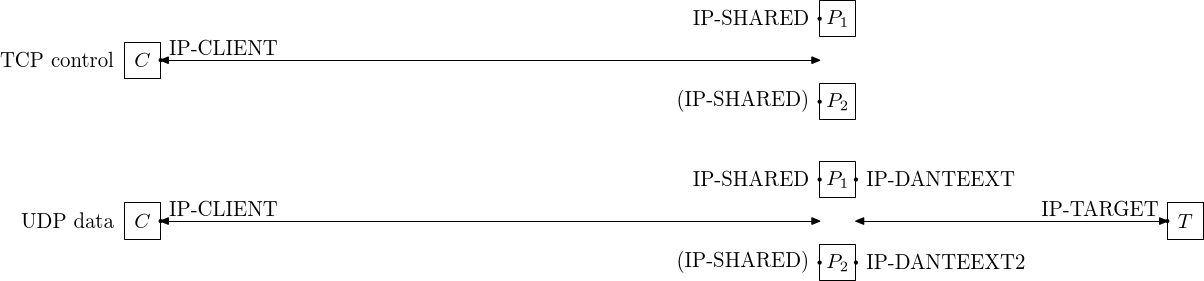

The connection endpoints in a layer 2 shared IP failover configuration are shown in the figure above, for the TCP based commands: CONNECT and BIND. There are two separate connections, from the client to the proxy, and from the proxy to the target server. The Dante servers bind to the IP-SHARED address, with the master being active (P1) and the backup server (P2) being passive.

Depending on which node is active, the following two connection pair alternatives exist:

- Master active: IP-CLIENT to IP-SHARED and IP-DANTEEXT to IP-TARGET

- Backup active: IP-CLIENT to IP-SHARED and IP-DANTEEXT2 to IP-TARGET

Only the IP-DANTEEXT/IP-DANTEEXT2 keywords are different in the two configurations, and depending on which address is used for IP-DANTEEXT/IP-DANTEEXT2, the two values might be identical. If the IP-SHARED address, or a similar shared address, is used also on the external Dante interface, the two values can be identical since the address is only used to send and receive traffic by one of the machines at any time.

For UDP traffic, the socket endpoints are shown in the figure above, and in addition to the TCP control connection, there are also two separate UDP data socket pairs. Of note is that for the layer 2 shared IP configuration, the IP-SHARED address is used consistently by the Dante server for both the TCP control connection and the UDP data sockets. As with the TCP CONNECT/BIND commands, the external UDP address will be either IP-DANTEEXT or IP-DANTEEXT2, depending on the host in use, but the values can be identical due to only one node being active at a time.

Failover systems using layer 2 fault tolerance include CARP and Clusterlabs/Pacemaker. Both of these provide similar functionality, implemented slightly differently. Using CARP, a virtual network interface will be configured with the shared IP-address on both the master and backup nodes, with only the master actually answering traffic to the shared IP-address. With the Clusterlabs/Pacemaker solution, only the master node has the shared IP-address configured. Seen from the application, with CARP the Dante server is always running on all nodes, but only receives requests on the node that is currently the master. With Clusterlabs/Pacemaker, the Dante server is only running on the master node, and is only started on a backup node when that node becomes active. The server on the master node is terminated when the node stops being the master/active node.

Example Dante configuration

A minimal Dante configuration can look like this (first Dante server):

errorlog: syslog

logoutput: /var/log/sockd.log

internal: IP-SHARED

external: IP-DANTEEXT #IP-DANTEEXT2 for second node

client pass {

from: 0/0 to: 0/0

log: error connect disconnect

}

socks pass {

from: 0/0 to: 0/0

log: error connect disconnect

}
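Since the nodes differ only in the external address, the per-node files can be generated from one template. The following is a minimal sketch of that idea, not something provided by Dante: the helper name write_sockd_conf is hypothetical, and the addresses in the usage note are placeholders.

```shell
#!/bin/sh
# Sketch: write a sockd.conf that differs between nodes only in "external".
# write_sockd_conf is a hypothetical helper, not part of Dante.
write_sockd_conf() (
    shared_ip=$1      # address shared by all nodes (IP-SHARED)
    external_ip=$2    # per-node external address (IP-DANTEEXT or IP-DANTEEXT2)
    outfile=$3        # file to write the configuration to

    cat > "$outfile" <<EOF
errorlog: syslog
logoutput: /var/log/sockd.log

internal: $shared_ip
external: $external_ip

client pass {
        from: 0/0 to: 0/0
        log: error connect disconnect
}

socks pass {
        from: 0/0 to: 0/0
        log: error connect disconnect
}
EOF
)
```

On the first node this could be called as `write_sockd_conf 10.0.0.1 192.0.2.11 /etc/sockd.conf`, and on the second node with its own external address as the second argument.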

Example CARP configuration

A two machine master/backup failover configuration can be created using CARP with the following configuration:

#carp setup - failover configuration - (run on master server)

ifconfig carp0 10.0.0.1/8 carpdev em0 vhid 1

#carp setup - failover configuration - (run on backup server)

ifconfig carp0 10.0.0.1/8 carpdev em0 vhid 1 advskew 100

Here 10.0.0.1 corresponds to IP-SHARED and is configured on both nodes. Note that this CARP configuration is only meant as an example; please consult the carp(4) man-page for details on setting up CARP nodes.

No special configuration is needed on the Dante server; Dante can be configured as if no redundancy is present, with either the CARP interface or the shared IP-address as the internal interface on both machines:

internal: carp0 #failover interface

The other lines can be as above, and this configuration will give a system where the backup server takes over when the master server goes down.

Dante failure and monitoring

The CARP based failover mechanism is handled on the IP-level; the CARP configuration above, by itself, does not address failure on the process level. If the Dante processes on the master server terminate while the master server is still running, the backup server will not take over and client requests will be dropped. An additional mechanism is needed, such as a monitoring process that will take down the carp interface if the Dante processes stop working.

The following is a simple example of how this can be done. The script uses maxconn.pl to check if Dante is running, and will ensure that the CARP interface is taken down if Dante is not running correctly, and that the CARP interface is up if Dante appears to be working correctly. The script should run on both the master and the backup nodes.

APP="loopcarpcheck.sh"

DELAY=5 #seconds between checks

SOCKSSERVER=10.0.0.1

SOCKSPORT=1080

CARPIF="carp0"

#initial state: assume running

SERVOK=1

ifconfig $CARPIF up

while true; do

maxconn.pl -s "$SOCKSSERVER:$SOCKSPORT" -b 127.0.0.1 -c 1 -E 5

if test $? -ne 0; then

#server appears to be down/non-functional

if test x"$SERVOK" = x1; then

#state was ok, take down carp interface

echo "$APP: server appears down, shutting down $CARPIF"

SERVOK=0

ifconfig $CARPIF down

fi

else

#server appears to be running correctly

if test x"$SERVOK" = x0; then

#state was failure, take up carp interface

echo "$APP: server seems ok, taking up $CARPIF"

SERVOK=1

ifconfig $CARPIF up

fi

fi

sleep $DELAY

done

The above is a simple script that does not attempt to address many potential issues, such as attempting to restart the Dante server. For a more complex solution that also handles this, a system like Clusterlabs/Pacemaker can be used.
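One of the issues the script leaves open is that a single failed check takes the CARP interface down immediately. A possible refinement, sketched here under the assumption that a few missed checks are tolerable, is to require several consecutive failures first. The names FAILLIMIT and next_action are hypothetical and only serve this illustration.

```shell
#!/bin/sh
# Sketch: decide what to do with the CARP interface after each check.
# FAILLIMIT and next_action are hypothetical names for this illustration.
FAILLIMIT=3   # consecutive failed checks required before taking the interface down

# next_action SERVOK FAILCOUNT CHECKSTATUS
#   SERVOK:      1 if the interface is currently up, 0 if it was taken down
#   FAILCOUNT:   number of consecutive failed checks, including the current one
#   CHECKSTATUS: exit status of the check command (0 means Dante answered)
# Prints "down", "up" or "none".
next_action() {
    if test "$3" -ne 0; then
        # check failed: only act once the failure limit has been reached
        if test "$1" -eq 1 && test "$2" -ge "$FAILLIMIT"; then
            echo down
        else
            echo none
        fi
    else
        # check succeeded: bring the interface back if it was taken down
        if test "$1" -eq 0; then
            echo up
        else
            echo none
        fi
    fi
}
```

Inside the monitoring loop, the script would keep a counter that is reset on success and incremented on failure, and run `ifconfig $CARPIF down` or `ifconfig $CARPIF up` when the function prints `down` or `up`.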

Example Clusterlabs/Pacemaker active/passive configuration

Clusterlabs/Pacemaker can be configured to use active/passive failover. In this type of configuration, the IP-address and the running Dante server are only active on one of the nodes at a time. When the backup takes over, the shared IP-address is first configured on the backup node, and only after this has been done is the Dante server started up.

A full description of all the steps involved in setting up a fully configured Pacemaker based cluster is outside the scope of this document. General configuration is also well documented elsewhere, including at these pages: clusterlabs.org Quickstart and RHEL High Availability Add-On configuration page.

The rest of this section assumes that a Pacemaker cluster has been created and nodes have been added. Examples are provided for commands to create and configure the shared IP-address and the Dante resources needed for a failover Dante configuration.

To use Pacemaker with Dante, an OCF Resource Agent script must first be installed, and can be downloaded from here: dante.ocf

The resource agent uses process based monitoring of the Dante SOCKS server, by verifying that the pid stored in the server pid file is actually running. The following three optional arguments are available:

- 'sockd' - The server binary (defaults to /usr/sbin/sockd).

- 'sockd_config' - The server configuration file (defaults to /etc/sockd.conf).

- 'sockd_pidfile' - The server pid file (defaults to /var/run/sockd.pid).
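The process based check described above can be pictured roughly as follows. This is a sketch of the general technique only, not the code used by the dante.ocf agent; the function name sockd_running is hypothetical.

```shell
#!/bin/sh
# Sketch of a pid-file based liveness check; not the code used by dante.ocf.
# sockd_running is a hypothetical helper name.
# Returns 0 if the pid file exists and names a live process, non-zero otherwise.
sockd_running() (
    pidfile=${1:-/var/run/sockd.pid}

    test -r "$pidfile" || exit 1
    pid=$(head -n 1 "$pidfile")
    case $pid in
        ''|*[!0-9]*) exit 1 ;;    # empty or not a number
    esac
    # kill -0 sends no signal; it only tests whether the process can be signalled
    kill -0 "$pid" 2>/dev/null
)
```

Because `kill -0` also fails when the caller lacks permission to signal the process, a check like this would normally run as root or as the user running the server.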

To install the resource agent (assuming resource files are found in the /usr/lib/ocf/resource.d directory), create the sub-directory /usr/lib/ocf/resource.d/dante and install the Dante OCF file there as sockd.
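The installation step might be scripted as in the sketch below. The helper name install_dante_agent is hypothetical, and the default directory is the one assumed in the text; it may differ on other systems.

```shell
#!/bin/sh
# Sketch: install the downloaded agent file as the "sockd" agent of provider "dante".
# install_dante_agent is a hypothetical helper; the default root directory is
# the one assumed in the text and may differ on other systems.
install_dante_agent() (
    src=$1                                  # downloaded dante.ocf file
    ocfdir=${2:-/usr/lib/ocf/resource.d}    # resource agent root directory

    mkdir -p "$ocfdir/dante" &&
    cp "$src" "$ocfdir/dante/sockd" &&
    chmod 755 "$ocfdir/dante/sockd"         # the agent must be executable
)
```

Run as root on each cluster node, for example `install_dante_agent ./dante.ocf`. The sub-directory and file names are what make the agent addressable as ocf:dante:sockd.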

With the OCF file and Dante installed on all cluster nodes, and the initial Pacemaker configuration done, the Dante related resources can be configured. The cluster will first need a shared IP-address that can be used by the Dante server on the active node:

pcs resource create FailoverIP ocf:heartbeat:IPaddr2 ip=10.0.0.1 cidr_netmask=8 op monitor interval=30s

The sockd.conf file is then configured with this IP-address as the internal address on each node:

internal: 10.0.0.1 port = 1080

If the external address is also set to the shared IP-address, traffic passing through the SOCKS server will appear identical for both nodes, with the same addresses as endpoints, regardless of which node is active:

external: 10.0.0.1

Alternatively, a unique external address can be used, in which case the external keyword will be different on each node.

With the Dante servers configured, it should be possible to create a SOCKS server resource, for example:

pcs resource create SOCKDfailover ocf:dante:sockd op monitor interval=30s

Or with all options specified:

pcs resource create SOCKDfailover ocf:dante:sockd \

sockd=/usr/local/sbin/sockd-1.4.2 \

sockd_config=/etc/sockd-failover.conf \

sockd_pidfile=/var/run/sockd-failover.pid op monitor interval=30s

The shared IP-address and the Dante server resources then need to be grouped together to ensure that they are always running on the same node at the same time:

pcs resource group add SOCKD_failover FailoverIP SOCKDfailover

Finally, the status can be queried to verify that all resources are running correctly.

pcs status

Layer 2 shared IP load balancing

A layer 2 shared IP load balancing system can be implemented by all nodes sharing the IP-address receiving all traffic to the address, but with only one of the nodes actually processing and replying to traffic from any given client. A hash algorithm is typically used at each node to determine if any given packet should be processed or ignored.
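The per-node decision can be illustrated with a simple source-address hash. The sketch below is an assumption made for illustration (the source address taken as an integer, modulo the number of nodes) and is not the hash used by CARP or Pacemaker; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch of source-address based node selection. This is an illustration only,
# not the hash used by CARP or Pacemaker; the function names are hypothetical.

# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer
ip_to_int() (
    IFS=.
    set -- $1     # split the address into its four octets
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# handles_packet SRCIP NODEINDEX NODECOUNT
# Returns 0 if the node with index NODEINDEX (0-based) should process traffic
# from SRCIP, 1 if it should ignore it.
handles_packet() {
    test $(( $(ip_to_int "$1") % $3 )) -eq "$2"
}
```

Every node evaluates the same function on the same packet, so exactly one node accepts it, and a given client address always maps to the same node as long as the node count is unchanged.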

Dante can be configured in this type of system by having the Dante server run on all nodes having the shared IP-address, with Dante configured on each node to receive SOCKS requests on the shared IP-address.

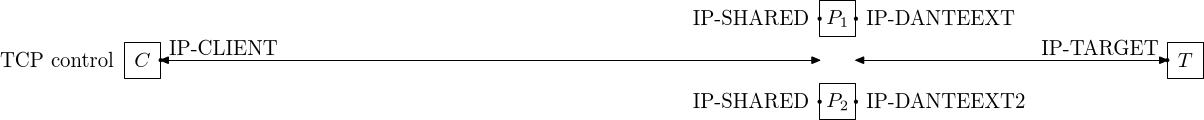

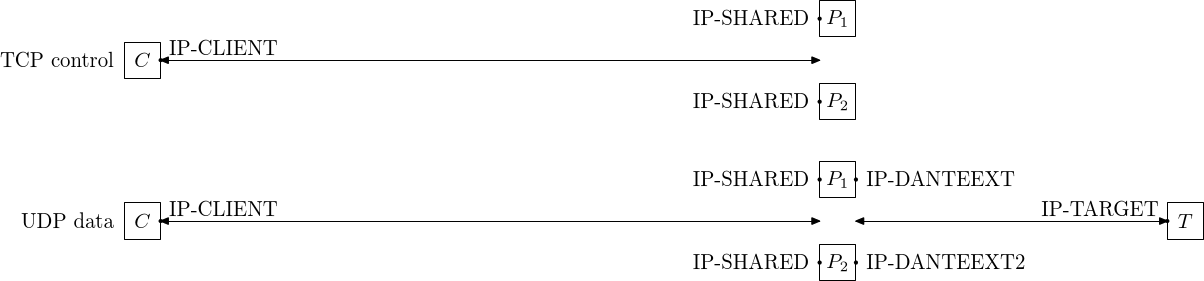

The connection endpoints in a layer 2 shared IP load-balanced configuration are shown in the figure above, for the TCP based commands: CONNECT and BIND. There are two separate connections, from the client to the proxy and from the proxy to the target server. Dante is bound to the IP-SHARED address on both nodes, with both servers (P1 and P2) being active at the same time.

Depending on which node a client is communicating with, the following two connection pair alternatives exist:

- P1 used: IP-CLIENT to IP-SHARED and IP-DANTEEXT to IP-TARGET

- P2 used: IP-CLIENT to IP-SHARED and IP-DANTEEXT2 to IP-TARGET

Only the IP-DANTEEXT/IP-DANTEEXT2 keywords are different in the two configurations, but in contrast with a layer 2 shared IP-address failover configuration, IP-DANTEEXT and IP-DANTEEXT2 will likely need to have different IP-addresses due to limitations in how shared IP-address load-balanced systems work. They will typically support receiving traffic to the shared IP-address, but initiating data transfers from a shared IP-address will not be supported and would result in incoming traffic for transfers that attempt this being processed at a different node than the one that initiated the transfer.

For UDP traffic, the socket endpoints are shown in the figure above, and in addition to the TCP control connection, there are also two separate UDP data socket pairs. Of note is that for the layer 2 shared IP configuration, the IP-SHARED address is used consistently by the Dante server for both the TCP control connection and the UDP data sockets. As with the TCP CONNECT/BIND commands, the external UDP address will be either IP-DANTEEXT or IP-DANTEEXT2, depending on the Dante server used. As noted above, in contrast with layer 2 shared IP-address failover configurations, using a shared IP-address for IP-DANTEEXT/IP-DANTEEXT2 might not be supported by typical load balancing systems.

CARP and Clusterlabs/Pacemaker also support shared IP-address load balancing, and can be used for load balancing between multiple nodes in a cluster that share the same IP-address. With these systems, each machine simultaneously runs a separate Dante server and load balancing is done on the kernel level, with filtering based on packet header values such as the source IP-address.

Example Dante configuration

A minimal Dante configuration can look like this (first Dante server):

errorlog: syslog

logoutput: /var/log/sockd.log

internal: IP-SHARED

external: IP-DANTEEXT #IP-DANTEEXT2 for second node

client pass {

from: 0/0 to: 0/0

log: error connect disconnect

}

socks pass {

from: 0/0 to: 0/0

log: error connect disconnect

}

Example CARP configuration

A two machine load-balanced Dante setup can be created using CARP with the following configuration:

#carp setup - load balancing configuration - (run on server 1)

ifconfig carp1 10.0.0.1/8 carpdev em0 carpnodes 1:0,2:100 balancing ip-stealth

#carp setup - load balancing configuration - (run on server 2)

ifconfig carp1 10.0.0.1/8 carpdev em0 carpnodes 1:100,2:0 balancing ip-stealth

This CARP configuration is only meant as an example; please consult the carp(4) man-page for details on setting up CARP nodes. Depending on the network switch the machines are connected to, a different configuration might be needed.

No special configuration is needed on the Dante server; Dante can be configured as if no redundancy is present, with either the CARP interface or the shared IP-address as the internal interface on both machines:

internal: carp1 #load balancing interface

Example Clusterlabs/Pacemaker active/active configuration

A Clusterlabs/Pacemaker load balancing configuration can be configured to work in the same way as CARP, with the shared IP and SOCKS server resources cloned to multiple cluster nodes. All nodes will then simultaneously have the same IP-address, and "sourceip" hashing can be used to give a persistent client to server node mapping across requests.

For information on initial Pacemaker configuration, see the description of Pacemaker in the active/passive configuration section above; the commands below assume that the cluster has been created, nodes have been added and that the Dante OCF Resource Agent script has been installed.

To add the Dante server and shared IP-address resources for a shared IP-address load-balanced system, the following commands can be used:

##create resources - (loadbalance, cloned)

pcs resource create SharedIP ocf:heartbeat:IPaddr2 ip=10.0.0.1 \

cidr_netmask=8 op monitor interval=30s

pcs resource create SOCKDloadbalance ocf:dante:sockd op monitor interval=30s

#clone address to make it run on all nodes, with sourceip hashing

pcs resource clone SharedIP globally-unique=true clone-node-max=1

pcs resource update SharedIP clusterip_hash=sourceip

#clone sockd service

pcs resource clone SOCKDloadbalance

#ensure address is configured before sockd is started

pcs constraint order SharedIP-clone then SOCKDloadbalance-clone

No special configuration is needed for the Dante servers. The internal address field in the sockd.conf file should be configured to use the shared IP-address:

internal: 10.0.0.1 #shared load-balance IP

The external Dante interface will need to use a different address, one that is distinct at each node in the cluster, either by specifying an IP-address directly or by using an interface name with an address that is different on each cluster node. Apart from the external address, all nodes can have the same Dante server configuration.
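A simple sanity check for this requirement is sketched below. The helper name external_is_distinct is hypothetical, and the check is deliberately naive: it compares the first value after each keyword as text and does not resolve interface names to addresses.

```shell
#!/bin/sh
# Sketch: flag a sockd.conf whose external address equals its internal one.
# external_is_distinct is a hypothetical helper; it compares the first value
# after each keyword as text and does not resolve interface names.
external_is_distinct() (
    conf=$1
    internal=$(awk '$1 == "internal:" { print $2; exit }' "$conf")
    external=$(awk '$1 == "external:" { print $2; exit }' "$conf")
    # succeed only if both values were found and they differ
    test -n "$internal" && test -n "$external" && test "$internal" != "$external"
)
```

For example, `external_is_distinct /etc/sockd.conf || echo "external address matches the shared address"` could be run on each node before the cloned resources are started.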