Redundancy: Fault tolerance and load balancing

This page describes how Dante can be configured for fault tolerance and load balancing.

There are various ways in which Dante can be installed in fault-tolerant and load-balanced configurations. The document describes tradeoffs that exist depending on how the redundancy mechanism, which will likely be external to Dante, is implemented.

We do not wish to make any official recommendation for specific systems, but rather provide examples of how a few different systems can be used to implement Dante in a fault-tolerant and/or load-balancing environment.

The examples can be used as starting points for building redundant Dante configurations, but we recommend that manuals for each specific system are consulted, rather than copying these examples directly.

Customers are also welcome to contact us via the support email or support phone number for assistance or further tips and recommendations for their particular environment and use.

Quick Summary

Dante can be used in various failover and load balancing configurations, including setups using tools such as CARP, relayd(8), and Clusterlabs/Pacemaker.

For a quick overview without too many details on how Dante can be configured in a redundant configuration, please take a look at our blog post on redundancy support in Dante.

For a more detailed overview, this page gives some general background information. Information on specific configurations can be found in these sub-pages:

- Process-level redundancy

- Layer 2-based failover and load balancing

- Layer 3-based redirection

- Layer 4/7-based redirection/forwarding

There are benefits and drawbacks to each approach, and no single optimal method to achieve redundancy. The appropriate solution will in many cases be determined by other factors, such as the operating system in use and the customer's usage scenario.

For Linux variants, the layer 2 page provides information on how to configure Clusterlabs/Pacemaker based solutions. The other approaches described are largely based on software available primarily on BSD variants (CARP/relayd), but all pages attempt to describe the approach in a general way so that the information can be useful also when using similar products that are not described here.

Overview

In server systems, both fault tolerance and load balancing typically imply an element of redundancy. In the case of fault tolerance, it can be implemented with two machines by letting a backup server take over if the primary server fails. In the case of load balancing, it can mean spreading the server load among multiple simultaneously running machines. A load-balanced system can also be fault-tolerant, if the system is able to handle the failure of one or more of the load-balanced nodes.

A simple Dante installation might use a single machine, running a single instance of Dante, without any fault tolerance or load balancing. For this type of system we can define the following potential failure states:

Hardware/OS failure leading to inability to forward traffic.

If a machine goes down completely, or some necessary component fails, the machine might become completely unavailable, or operate at significantly reduced performance.

Dante process termination leading to inability to handle new/existing clients.

If Dante crashes or some other fatal bug is triggered, Dante may become unable to provide any further service until restarted.

Load sufficiently high to lead to reduced per-session performance due to hardware/OS/Dante scalability limitations.

An optimal system will scale linearly with the number of requests and the amount of traffic received. A system with resource limitations or bottlenecks will instead show reduced performance when the client load or the number of clients becomes too high, or when the server configuration is too complex.

This is not an exhaustive list of possible failure states, but represents the primary motivators for adding some form of redundancy, with the goal of improving fault tolerance, improving scalability, or both.

For Dante, the possibility of adding some form of redundancy exists in both the SOCKS client and the SOCKS server. A SOCKS client will typically have the SOCKS server to use configured as a single IP-address and port number combination. While it could be possible to create a SOCKS client implementation that allowed multiple SOCKS server addresses to be specified, and based on this handle redundancy in the client, this is not currently supported by the Dante SOCKS client, and might not be supported by other implementations.

In practice, both fault tolerance and load balancing will typically be handled at the server side, giving the SOCKS client a single IP-address and port number to connect to, with multiple Dante servers answering requests to the shared address. This removes the need for any special support for redundancy in the client.

This currently gives the following possibilities for adding redundancy to Dante:

Process-level redundancy: A certain degree of fault tolerance can be introduced at the process level by having multiple Dante servers run on the same machine, all accepting new clients. If any one of the Dante servers terminates for some reason, the other Dante servers running on the same machine can continue to handle new clients.

Layer 2 shared IP failover fault tolerance: Multiple machines can share the same IP-address, with one machine being the primary server and a set of one or more additional backup servers from which a new primary server will be selected to take over if the machine that is the primary server becomes unavailable in some way.

Layer 2 shared IP load balancing: A cluster of nodes can share the same IP-address. All traffic is received by all nodes, with a hash algorithm used to determine if a node should drop or process any given packet.

Layer 3 redirection: Traffic to a shared Dante server IP-address can be redirected to different machines at the packet level. This can be used to run multiple Dante servers on different machines, with client requests redirected among these machines according to the type of redundancy wanted; both fault tolerance and load balancing configurations are possible. This can typically be done at the kernel level.

Layer 4/7 redirection: Similar to layer 3 redirection, but with an application serving as the endpoint for each TCP connection/UDP session forwarding traffic to multiple Dante servers.
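The layer 2 shared IP load balancing item above can be sketched as follows. This is only an illustration of the idea, not how CARP or any other product implements it: the function name `node_should_process`, the use of SHA-256, and hashing on the source IP-address alone are assumptions made for the example. Every node receives every packet, and each node independently performs the same calculation to decide whether the packet is its own to process.

```python
import hashlib


def node_should_process(source_ip: str, node_index: int, node_count: int) -> bool:
    """Decide whether this node handles a packet or drops it.

    All nodes run the same calculation on the same packet, so exactly one
    node (the one whose index matches the hash bucket) processes it.
    """
    digest = hashlib.sha256(source_ip.encode("ascii")).digest()
    bucket = int.from_bytes(digest[:8], "big") % node_count
    return bucket == node_index


# Three nodes sharing one IP-address: find the node that accepts each packet.
for client in ("192.0.2.10", "192.0.2.11", "198.51.100.7"):
    accepting = [i for i in range(3) if node_should_process(client, i, 3)]
    print(client, "is handled by node", accepting[0])
```

Because the decision depends only on the packet and the cluster size, no coordination between the nodes is needed for each packet. The cluster size itself must of course be agreed upon, and a change in membership changes which node handles which client.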

Compatibility and limitations

Replacing a single Dante server with multiple servers in a fault-tolerant or load-balanced configuration introduces extra complexity and potentially changes the behaviour of client/server interaction, depending on how the redundancy is implemented.

SOCKS session state and Load Balancing Algorithms

A load-balancing system potentially has more complexity than a fault-tolerant system. The latter can be implemented with two nodes with identical configurations, where only one is active at a time, while the former will have multiple nodes that are active at the same time, potentially with the Dante servers bound to different IP-addresses.

Sufficient information to handle the increased complexity of load-balanced systems is exchanged between the client and server as part of SOCKS protocol negotiation, but it can still be useful to be aware of how these interactions occur when creating a load-balanced Dante configuration.

A load balancing system will typically have a pool of server machines available, and when a client request is received it is forwarded to one of these machines.

The algorithm used to choose which server to forward to can be implemented in many different ways, with one important characteristic being whether it will consistently forward all requests from a given client to the same SOCKS server, or if requests from a given client can be forwarded to different SOCKS servers.

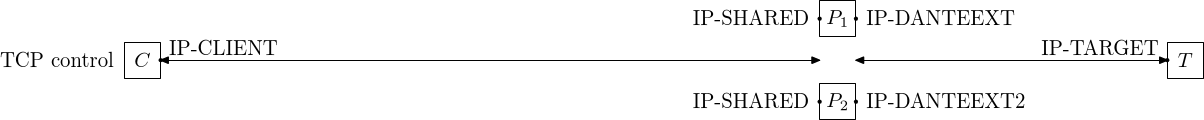

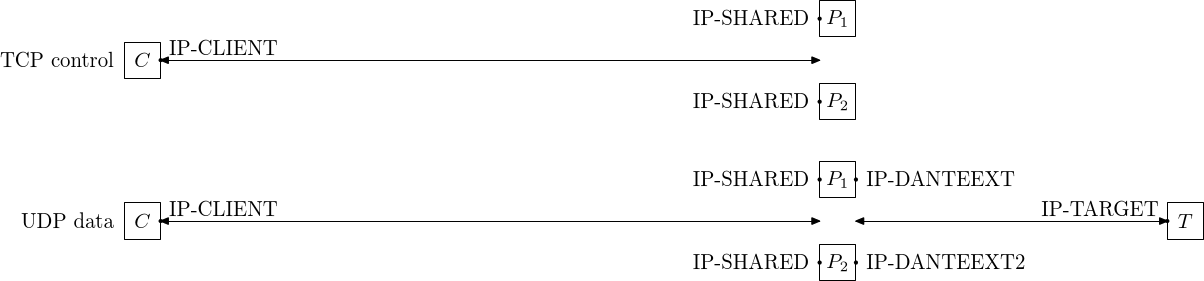

The behaviour of the commands in the SOCKS protocol is illustrated in this document using images with keywords having the following definitions:

- IP-CLIENT - IP-address of the SOCKS client.

- IP-SHARED - IP-address seen as SOCKS server address by SOCKS client.

- IP-DANTE - Internal IP-address of the first Dante server, when different from IP-SHARED.

- IP-DANTE2 - Internal IP-address of the second Dante server, when different from IP-SHARED.

- IP-DANTEEXT - External IP-address of the first Dante server.

- IP-DANTEEXT2 - External IP-address of the second Dante server.

- IP-TARGET - Address of the target server. This is the address of the remote server the SOCKS client wants to reach via the Dante server.

- IP-RELAYEXT - External relay address, when a layer 4 relay is used.

All commands in the SOCKS standard first open a TCP control connection, which for the CONNECT and BIND commands is also used for forwarding data. For UDP traffic, the UDP ASSOCIATE command establishes, in addition to the TCP control connection, a separate UDP socket that is used to forward UDP data.

In more detail, the relevant behaviour for commands in the SOCKS standard is as follows:

CONNECT - Used to request outgoing TCP connections. The same TCP connection is used for both the SOCKS request negotiation and the subsequent data forwarding. The socket endpoints of one possible load balancing configuration are illustrated in the figure above.

CONNECT commands can be used with any load balancing algorithm since there is no need to reuse the same Dante server or IP-DANTEEXT address for subsequent requests from the same client, unless this type of limitation is imposed by the application issuing the CONNECT command.

BIND - Used to receive incoming TCP connections, with the port bound on the external side of the SOCKS server. As with CONNECT, the same TCP connection is used for SOCKS negotiation and data forwarding (after a connection is received on the bound port). The socket endpoints are the same as for the CONNECT command in the image below.

The address bound on the external side of the SOCKS server is independent of the address used to receive client requests, and it is reported to the client as part of the protocol negotiation, along with the address of the remote peer if it connects to the bound port.

As with CONNECT, there is no special state retained on the SOCKS server for BIND apart from the TCP connection from the client, so the BIND command should work with any load balancing algorithm, but do note: the external address of the SOCKS server is reported to the client, so with any load balancing algorithm that does not consistently use the same server for requests from the same client, the client application will potentially see different addresses reported for different requests. This might be a problem for some applications.

This is illustrated in the following diagram showing the combined TCP control and data connection:

UDP ASSOCIATE - Used to send and receive UDP traffic. This command uses a TCP connection for the initial SOCKS negotiation, and the Dante server binds a port on the client side for exchanging UDP traffic with the client. A UDP port is also bound on the Dante server's external interface for exchanging data with the target server or servers. Both the TCP control connection and the UDP ports need to exist on the same machine. Data forwarding will continue as long as the TCP control connection is active.

The Dante server reports to the client the IP-address and port to which the client should send UDP data. If this address is an IP-address shared between multiple Dante servers, the load balancing algorithm needs to use a source IP-address based hashing algorithm to ensure that both the TCP control connection and the UDP data end up at the same machine.

This is illustrated in the following diagram, showing both the TCP control connection and the UDP data connection:

In the case of UDP traffic, another factor to consider is the extent to which the actual Dante server nodes are visible to the SOCKS clients. The shared address is used for the TCP control connection, but the UDP ASSOCIATE command will return the internal address of the Dante server (IP-DANTE/IP-DANTE2) to the client, and the client will subsequently send UDP packets to this address. The load balancing system thus must be able to handle this address being reported to the clients if UDP via Dante is to be supported.

An alternative approach may be to have a relay server inspect the SOCKS control data and rewrite the UDP address returned to the client. We do not know of any relay servers that have support for this, though one could presumably be written.
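To make concrete which address the client learns from the server, the following sketch parses a SOCKS reply and extracts the bound address and port. It assumes SOCKS version 5 as specified in RFC 1928 (reply layout VER, REP, RSV, ATYP, BND.ADDR, BND.PORT); the function name `parse_socks5_reply` and the sample addresses are made up for the example and are not part of Dante.

```python
import ipaddress
import struct

ATYP_IPV4 = 0x01
ATYP_DOMAIN = 0x03
ATYP_IPV6 = 0x04


def parse_socks5_reply(data: bytes):
    """Return (reply_code, bound_address, bound_port) from a SOCKS5 reply."""
    if len(data) < 5:
        raise ValueError("reply too short")
    version, reply_code, _reserved, atyp = data[:4]
    if version != 5:
        raise ValueError("not a SOCKS version 5 reply")
    if atyp == ATYP_IPV4:
        offset, addr_len = 4, 4
    elif atyp == ATYP_IPV6:
        offset, addr_len = 4, 16
    elif atyp == ATYP_DOMAIN:
        # First address byte is the length of the domain name.
        offset, addr_len = 5, data[4]
    else:
        raise ValueError("unknown address type")
    end = offset + addr_len
    if len(data) < end + 2:
        raise ValueError("reply truncated")
    raw = data[offset:end]
    if atyp == ATYP_DOMAIN:
        address = raw.decode("ascii")
    else:
        address = str(ipaddress.ip_address(raw))
    (port,) = struct.unpack("!H", data[end:end + 2])
    return reply_code, address, port


# A UDP ASSOCIATE reply in which the server reports an internal address
# (standing in for IP-DANTE) rather than the shared address.
reply = bytes([5, 0, 0, ATYP_IPV4]) + ipaddress.ip_address("10.0.0.2").packed \
    + struct.pack("!H", 1080)
print(parse_socks5_reply(reply))
```

The address returned here is the one the client will send its UDP packets to, which is why the load balancing system must either make that address reachable from the client or ensure that the shared address leads to the same machine as the TCP control connection.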

Redirection and load balancing considerations

For redirection based load balancing, it can be useful to first examine some potential motivations for wanting to do this.

One potential problem with a master/backup failover system is that the backup node, if it is rarely used, might not be working correctly when the master fails. In contrast, one way to think of load balancing is as a redundant failover system, where the backup node is constantly in use. Having all nodes active at all times makes backup node reliability problems unlikely, since an incorrectly configured or failing backup node will be quickly detected. One possible motivation for using load balancing can thus be to reduce the chance of all units failing at the same time.

Another typical motivation for using load balancing is to improve performance. For example, in a scenario with a web or database server receiving requests that require significant processing time, load balancing can improve performance by spreading the load over multiple machines. The bottleneck in this type of scenario might be the CPU or the HDD, and scalability can be improved via parallelisation by adding additional machines.

For a Dante server, the primary operation that is being performed is data forwarding. This might involve CPU intensive operations such as encryption, if the data sent between the SOCKS client and Dante is being encrypted, but apart from this it will primarily consist of reading data from one socket and writing it to another, which can be done quite efficiently.

The potential bottlenecks in the Dante server nodes must also be seen in relation to the node performing the redirection.

If, for example, the redirection node can transmit data at 1 Gbit/s, and the network interface card (NIC) at the Dante nodes can only transmit data at 100 Mbit/s, then load balancing will work in the same way as in the web/database server scenario. The only way to achieve a total data rate through the redirection node at full speed is to spread the traffic over multiple nodes; ten 100 Mbit/s Dante nodes combined should theoretically be able to achieve total data rates near 1 Gbit/s, assuming that data intensive client sessions and traffic are evenly spread among the Dante server machines.
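The arithmetic in this example can be written as a small calculation. This is a deliberately simplified model that assumes perfectly even traffic distribution and ignores all overhead; the function name `aggregate_rate_mbit` is made up for the illustration.

```python
def aggregate_rate_mbit(redirector_mbit: int, node_mbit: int, node_count: int) -> int:
    """Theoretical upper bound on total throughput, in Mbit/s.

    The total can never exceed what the redirection node can forward,
    nor the combined capacity of the Dante nodes behind it.
    """
    return min(redirector_mbit, node_mbit * node_count)


# A 1 Gbit/s redirection node in front of 100 Mbit/s Dante nodes.
for nodes in (1, 4, 10):
    print(nodes, "node(s):", aggregate_rate_mbit(1000, 100, nodes), "Mbit/s")
```

In this model, each added 100 Mbit/s node raises the bound until the redirection node itself becomes the limit.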

However, if the Dante server machines have NICs that can achieve transfer rates identical to the redirection node, the situation becomes different. If each Dante server node can potentially achieve the same rate as the redirection node, the redirection node becomes the primary bottleneck. There might however still be benefits from using load balancing, since the following bottlenecks might exist in Dante, or the machines running Dante:

The networking cards - Desktop/cheaper networking cards might not be able to sustain transfer rates at the maximum link speed.

The CPU, bus capacity/copy overhead - Various subsystems might limit the data rates possible on a machine when data forwarding is performed by a user-space application.

OS overhead/switching - Scalability limitations in the operating system; the performance achievable by each client session might be reduced if the machine has many processes or sockets.

Application overhead - Scalability limitations in Dante; the per-client session performance might fall when there are many clients or data is transmitted at high data rates.

HDD - If verbose logging is enabled in the Dante server, writing of logs to disk might become a bottleneck.

Depending on the Dante server hardware, these potential bottlenecks might only become visible at high data forwarding rates. When they do, the typical symptom is that, for example, two smaller TCP/IP packets sent by the SOCKS client to Dante are forwarded as one larger TCP/IP packet by Dante.

For a layer 3 redirection based load balancing system, the overhead of data forwarding can likely be assumed to be lower than for an application running in user space. There will for this reason be a possible gap between the data forwarding rates achievable at the redirection unit and the Dante server machine, which would mean that there would be a potential benefit from using load balancing. Compared to the web/database server scenario however, these benefits might not be as significant. There might only be a difference in performance if there are very high numbers of simultaneous clients, or very high total data rates. The added costs and complexity of having extra machines might in many cases not be worth it, compared to using a single more powerful machine with a server-grade NIC.

If improving performance is the primary motivation for doing load balancing, performance testing should be used to verify that there is an actual improvement that is worth the added complexity.

Multiple servers and external address variation

As noted above, if each redundant Dante server uses different external addresses, compatibility problems might arise for some applications.

For example, FTP is an application that in some modes needs to perform multiple SOCKS command operations in sequence (CONNECT, then BIND) when using a SOCKS server, and some implementations might fail if the external address changes between operations. This is primarily a potential problem for load balancing systems, and can be avoided if the same SOCKS server is used consistently for requests from the same SOCKS client. Load balancing systems will typically have a way to select a source address based hash algorithm that gives this behaviour.

If possible, using a source IP-address based hash algorithm is recommended for load balancing systems (and not an IP-address + port number algorithm). This might reduce the benefits of load balancing if the load is very uneven, with most of the traffic coming from clients at a small number of IP-addresses, but unless it is known that there will be no client compatibility problems from using a different hashing algorithm, this approach reduces the possibility of unexpected client application failures.
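The difference between the two hashing approaches can be illustrated with a short sketch. It does not reproduce the algorithm of any particular load balancer: the SHA-256 based bucket function and the function names are assumptions for the example, and the server names are the placeholder keywords used in the figures.

```python
import hashlib

SERVERS = ["IP-DANTE", "IP-DANTE2"]  # placeholder names for the server pool


def _bucket(key: str, count: int) -> int:
    """Map a string key to a stable bucket number in range(count)."""
    digest = hashlib.sha256(key.encode("ascii")).digest()
    return int.from_bytes(digest[:8], "big") % count


def pick_by_source_ip(client_ip: str, client_port: int) -> str:
    """Source IP-address hash: the port is ignored, so every request from
    the same client IP-address reaches the same server."""
    return SERVERS[_bucket(client_ip, len(SERVERS))]


def pick_by_ip_and_port(client_ip: str, client_port: int) -> str:
    """IP-address + port hash: each new connection uses a new source port,
    so consecutive requests from one client may reach different servers."""
    return SERVERS[_bucket(f"{client_ip}:{client_port}", len(SERVERS))]


# An FTP-like client issuing CONNECT and then BIND from two source ports.
for port in (50000, 50001):
    print(port, pick_by_source_ip("192.0.2.10", port),
          pick_by_ip_and_port("192.0.2.10", port))
```

With the source IP-address hash, the CONNECT and the subsequent BIND always land on the same Dante server, so the client sees a consistent external address. With the IP-address + port hash this is not guaranteed.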

GSSAPI Authentication and redundancy

For both shared IP and redirection techniques, whether they are used for fault tolerance or load balancing, the client will see the servers as having a single IP-address, while the Dante servers might actually be bound to different IP-addresses and running on different machines.

When GSSAPI authentication is used, the server identity seen by the client must match that of the shared IP-address the client communicates with, which means that the service name of all Dante servers must match that of the shared IP-address, even if the shared IP-address is an address that does not exist on the machine Dante is running on.

For example, assume the principal is rcmd/dante.example.com@EXAMPLE.COM, with dante.example.com having the IP-address 10.0.0.1. Depending on how the Dante server redundancy is implemented, the Dante servers might either all be bound to and receive client requests at this address, or they might all have different addresses. Even if the correct address is bound to, this might only be the address used for the load balancing system, with the actual IP-address and hostname of the machine being different.

To ensure that all Dante servers use the correct hostname during GSSAPI authentication, the gssapi.servicename keyword can be used in the relevant client pass rule to specify the shared server identity:

    gssapi.servicename: rcmd/dante.example.com

The keytab file should also be the same on all hosts, for example:

    gssapi.keytab: /etc/sockd.keytab-carploadbalance

A full 'client pass' rule might look like this with both keywords specified:

    client pass {
            from: 0.0.0.0/0 to: 0.0.0.0/0
            log: error connect disconnect
            gssapi.keytab: /etc/sockd.keytab-carploadbalance
            gssapi.servicename: rcmd/dante.example.com
    }

Here, the address dante.example.com is expected to resolve to the shared IP-address, and the server keytab is expected to contain the correct Kerberos principal: rcmd/dante.example.com

For additional details on setting up GSSAPI authentication in Dante, please see the GSSAPI authentication page.

A full sockd.conf file configured for GSSAPI authentication can look like this:

    errorlog: syslog
    logoutput: /var/log/sockd.log
    debug: 0

    internal: carp0 #loadbalance interface
    external: 10.0.0.1 #external address

    socksmethod: gssapi

    user.privileged: root
    user.notprivileged: _sockd

    client pass {
            from: 0/0 to: 0/0
            log: error connect disconnect
            gssapi.keytab: /etc/sockd.keytab-carploadbalance
            gssapi.servicename: rcmd/carploadbalance.example.com
            # clientcompatibility: necgssapi #might be needed for some clients
    }

    socks pass {
            from: 0/0 to: 0/0
            log: error connect disconnect
    }

SOCKS session state and failover limitations

The failover mechanism in a fault-tolerant system will typically trigger upon machine or process failure. This will result in loss of all active sessions, here being all active TCP connections and UDP associations. Sessions will need to be reestablished on the backup Dante server for data forwarding to continue.

For some operating systems, it might be possible to create a mechanism in Dante that allows session recovery in case of machine failure, but this is not currently supported.
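Since sessions are lost on failover, a client application that needs to survive it has to reconnect by itself. The following is a minimal sketch of such retry logic and is not part of Dante: the function name `reestablish_session`, the retry count, and the delay are assumptions, and the `connect` callable stands in for whatever the application does to open a connection to the shared address and repeat the SOCKS negotiation.

```python
import time


def reestablish_session(connect, attempts: int = 5, delay: float = 1.0):
    """Call connect() until it succeeds or the attempts are used up.

    connect is any callable that returns a new session and raises OSError
    (for example ConnectionRefusedError) while no server is reachable.
    """
    last_error = None
    for attempt in range(attempts):
        try:
            return connect()
        except OSError as exc:
            last_error = exc
            if attempt < attempts - 1:
                time.sleep(delay)  # give the backup server time to take over
    raise ConnectionError(f"gave up after {attempts} attempts") from last_error


# Simulated failover: the first two attempts fail while the backup takes over.
outcomes = iter([ConnectionRefusedError(), ConnectionResetError(), "new session"])


def fake_connect():
    result = next(outcomes)
    if isinstance(result, Exception):
        raise result
    return result


print(reestablish_session(fake_connect, delay=0.0))
```

In a real client, `connect` might wrap `socket.create_connection()` to the shared address followed by the SOCKS negotiation. Any application-level state, such as a partially completed transfer, must still be recovered by the application itself.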